StableSwarmUI 0.5.9 Alpha 👍

A Modular Stable Diffusion Web-User-Interface, with an emphasis on making powertools easily accessible, high performance, and extensibility.

Dieses Projekt wurde mit einem C#-Backend-Server erstellt, um die Leistung zu maximieren und gleichzeitig die Codekomplexität nur minimal zu erhöhen. Während die meisten ML-Projekte in der Regel in Python geschrieben werden, reicht diese Sprache einfach nicht aus, um die Leistungsziele dieses Projekts* zu erreichen (d. h. um einen sehr schnellen und reaktionsfähigen, für mehrere Benutzer geeigneten Multi-Backend-Dienst bereitzustellen), insbesondere fehlt ihr „true“. „Multithreading-Fähigkeiten (aufgrund von Python GIL), die für StableSwarmUI als dringend notwendig erachtet wurden (es muss in der Lage sein, verfügbare CPU-Kerne zu nutzen und gleichzeitig Benutzeranfragen zu bedienen und interne Daten zu verwalten, um so schnell wie möglich auf alle Anfragen reagieren zu können).

https://github.com/Stability-AI/StableSwarmUI

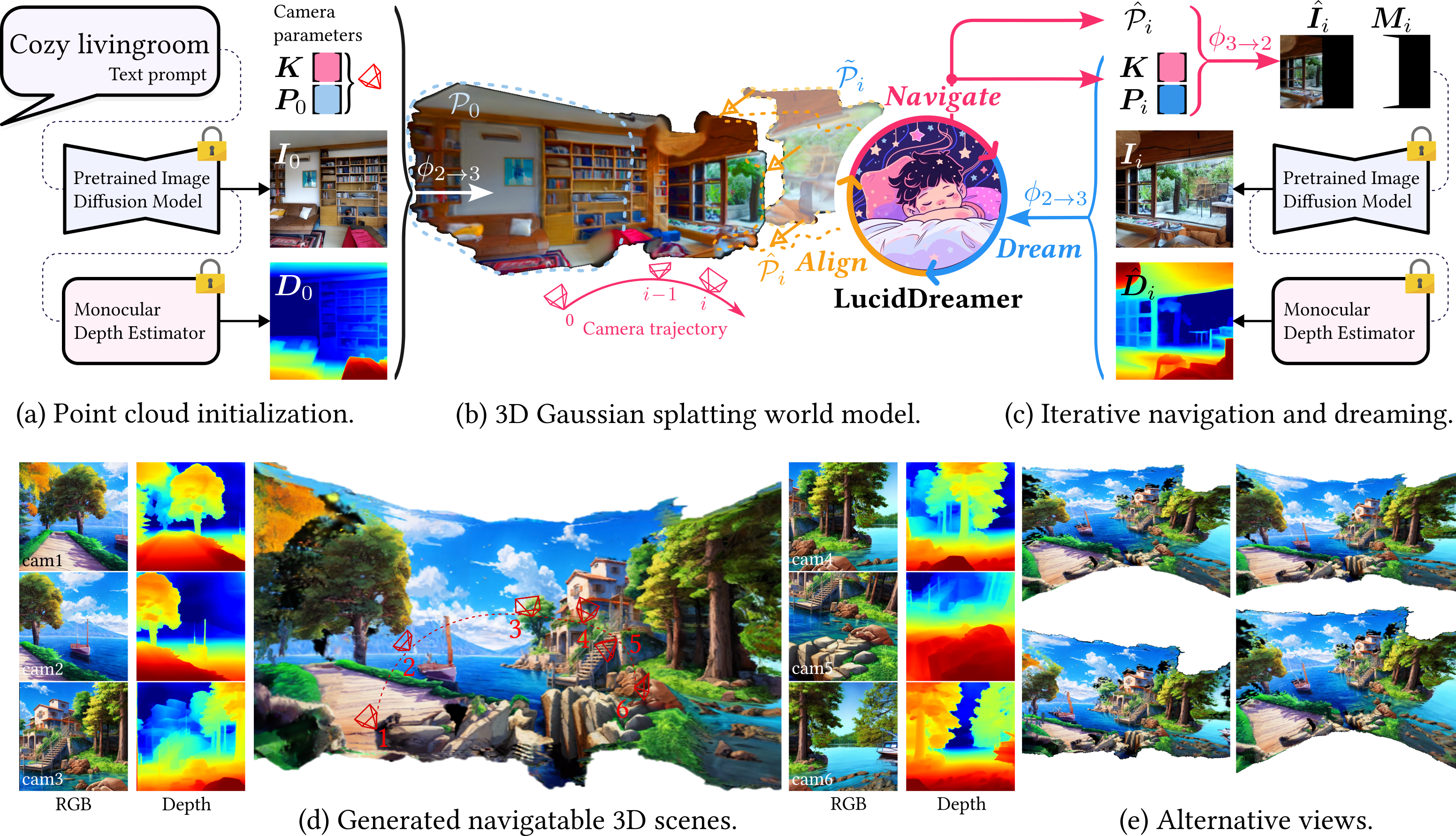

LucidDreamer: Towards High-Fidelity Text-to-3D Generation via Interval Score Matching

This live demo allows you to generate high-quality 3D content using text prompts. The outputs are 360° rendered 3d gaussian video and training progress visualization.

https://huggingface.co/spaces/haodongli/LucidDreamer

Civitai SDXL Safetensor Cog template

https://github.com/lucataco/cog-civitai-sdxl-template

attempt to use TensorRT with ComfyUI

best suited for RTX 20xx-30xx-40xx

https://github.com/phineas-pta/comfy-trt-test

SD-Turbo Model Card

SD-Turbo is a fast generative text-to-image model that can synthesize photorealistic images from a text prompt in a single network evaluation. We release SD-Turbo as a research artifact, and to study small, distilled text-to-image models. For increased quality and prompt understanding, we recommend SDXL-Turbo.

https://huggingface.co/stabilityai/sd-turbo

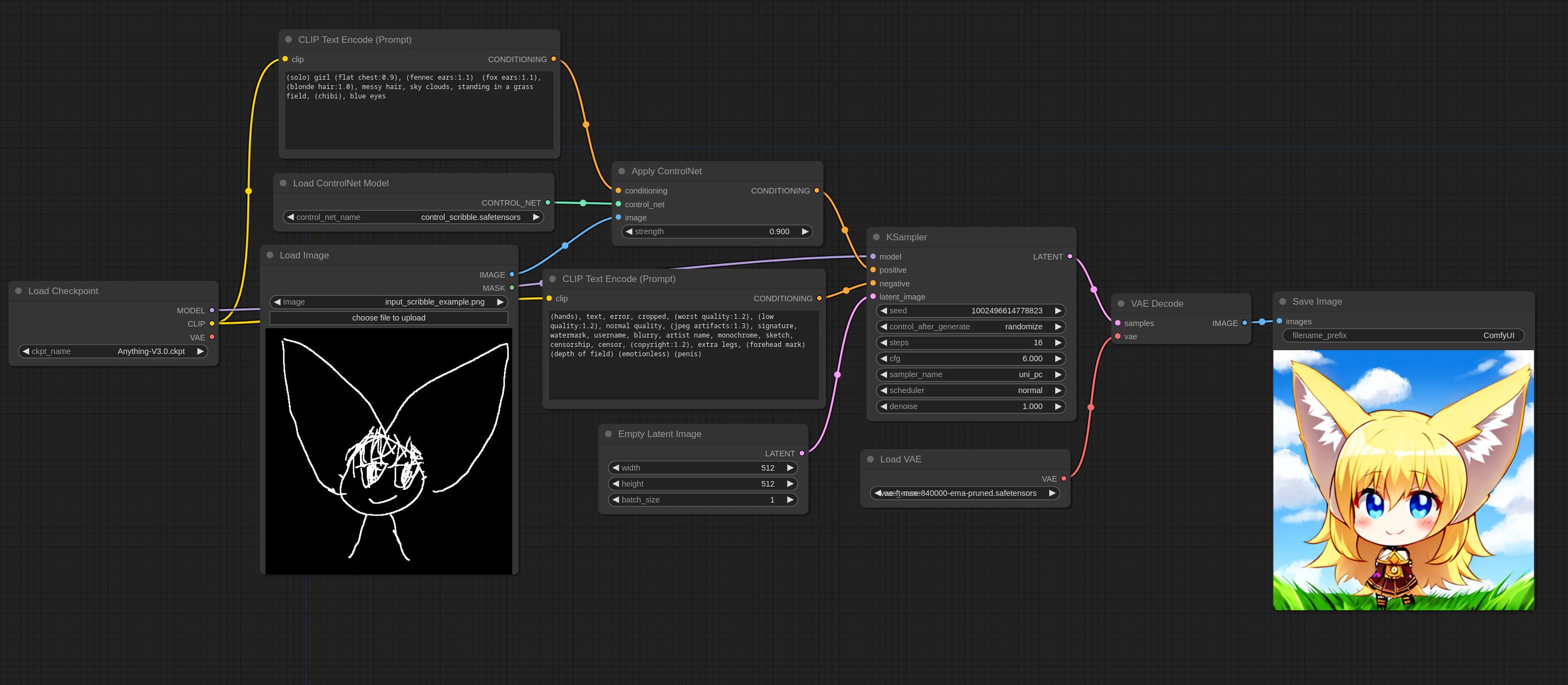

ComfyUI Custom Nodes

Custom nodes that extend the capabilities of ComfyUI

https://github.com/AlekPet/ComfyUI_Custom_Nodes_AlekPet

ComfyUI_stable_fast

Experimental usage of stable-fast and TensorRT.

https://github.com/gameltb/ComfyUI_stable_fast

MagicAnimate: Temporally Consistent Human Image Animation using Diffusion Model

Release inference code and gradio demo. We are working to improve MagicAnimate, stay tuned!

https://github.com/magic-research/magic-animate

https://huggingface.co/spaces/zcxu-eric/magicanimate

MotionDirector: Motion Customization of Text-to-Video Diffusion Models

Large-scale pre-trained diffusion models have exhibited remarkable capabilities in diverse video generations. Given a set of video clips of the same motion concept, the task of Motion Customization is to adapt existing text-to-video diffusion models to generate videos with this motion. For example, generating a video with a car moving in a prescribed manner under specific camera movements to make a movie, or a video illustrating how a bear would lift weights to inspire creators.

Introducing SDXL Turbo: A Real-Time Text-to-Image Generation Model

SDXL Turbo achieves state-of-the-art performance with a new distillation technology, enabling single-step image generation with unprecedented quality, reducing the required step count from 50 to just one.

See our research paper for specific technical details regarding the model’s new distillation technique that leverages a combination of adversarial training and score distillation.

Download the model weights and code on Hugging Face, currently being released under a non-commercial research license that permits personal, non-commercial use.

Test SDXL Turbo on Stability AI’s image editing platform Clipdrop, with a beta demonstration of the real-time text-to-image generation capabilities.

https://stability.ai/news/stability-ai-sdxl-turbo

ComfyUI-Video-Matting

A minimalistic implementation of Robust Video Matting (RVM) in ComfyUI

https://github.com/Fannovel16/ComfyUI-Video-Matting

Goodbye cold boot — how we made LoRA Inference 300% faster

We swap the Stable Diffusion LoRA adapters per user request, while keeping the base model warm allowing fast LoRA inference across multiple users. You can experience this by browsing our LoRA catalogue and playing with the inference widget.

https://huggingface.co/blog/lora-adapters-dynamic-loading

😴 LucidDreamer: Domain-free Generation of 3D Gaussian Splatting Scenes

https://github.com/luciddreamer-cvlab/LucidDreamer

Turn your ideas into emojis in seconds. Generate your favorite Slack emojis with just one click.

Fooocus is a rethinking of Stable Diffusion and Midjourney’s designs

https://github.com/lllyasviel/Fooocus

ReActor for Stable Diffusion

The Fast and Simple FaceSwap Extension with a lot of improvements and without NSFW filter (uncensored, use it on your own responsibility)

https://github.com/Gourieff/sd-webui-reactor

wyrde-comfyui-workflows

some wyrde workflows for comfyUI

https://github.com/wyrde/wyrde-comfyui-workflows

Custom Nodes, Extensions, and Tools for ComfyUI

https://github.com/WASasquatch/comfyui-plugins

sd-webui-comfyui

sd-webui-comfyui is an extension for A1111 webui that embeds ComfyUI workflows in different sections of the normal pipeline of the webui. This allows to create ComfyUI nodes that interact directly with some parts of the webui’s normal pipeline.

https://github.com/ModelSurge/sd-webui-comfyui

ComfyBox

ComfyBox is a frontend to Stable Diffusion that lets you create custom image generation interfaces without any code. It uses ComfyUI under the hood for maximum power and extensibility.

https://github.com/space-nuko/ComfyBox

DragGAN

Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold

https://github.com/XingangPan/DragGAN

StyleGAN3

We observe that despite their hierarchical convolutional nature, the synthesis process of typical generative adversarial networks depends on absolute pixel coordinates in an unhealthy manner. This manifests itself as, e.g., detail appearing to be glued to image coordinates instead of the surfaces of depicted objects. We trace the root cause to careless signal processing that causes aliasing in the generator network. Interpreting all signals in the network as continuous, we derive generally applicable, small architectural changes that guarantee that unwanted information cannot leak into the hierarchical synthesis process. The resulting networks match the FID of StyleGAN2 but differ dramatically in their internal representations, and they are fully equivariant to translation and rotation even at subpixel scales. Our results pave the way for generative models better suited for video and animation.

https://github.com/NVlabs/stylegan3

ComfyUI ExLlama Nodes

https://github.com/Zuellni/ComfyUI-ExLlama-Nodes

Generative AI for Krita

Generate images from within Krita with minimal fuss: Select an area, push a button, and new content that matches your image will be generated. Or expand your canvas and fill new areas with generated content that blends right in. Text prompts are optional. No tweaking required!

Local. Open source. Free.

https://github.com/Acly/krita-ai-diffusion

ai-generated-qr-codes-with-stable-diffusion

DiffBIR: Towards Blind Image Restoration with Generative Diffusion Prior

https://github.com/XPixelGroup/DiffBIR

Inference of Stable Diffusion in pure C/C++

Plain C/C++ implementation based on ggml, working in the same way as llama.cpp

16-bit, 32-bit float support

4‑bit, 5‑bit and 8‑bit integer quantization support

https://github.com/leejet/stable-diffusion.cpp

FaceFusion

Next generation face swapper and enhancer.

https://github.com/facefusion/facefusion

ComfyUI

The most powerful and modular stable diffusion GUI and backend.

https://github.com/comfyanonymous/ComfyUI

ComfyUI Examples

This repo contains examples of what is achievable with ComfyUI. All the images in this repo contain metadata which means they can be loaded into ComfyUI with the Load button (or dragged onto the window) to get the full workflow that was used to create the image.

https://comfyanonymous.github.io/ComfyUI_examples/

WAS Node Suite

A node suite for ComfyUI with many new nodes, such as image processing, text processing, and more.

https://github.com/WASasquatch/was-node-suite-comfyui

A1111-sd-zoe-depth

https://github.com/sanmeow/a1111-sd-zoe-depth

ResShift: Efficient Diffusion Model for Image Super-resolution by Residual Shifting

Diffusion-based image super-resolution (SR) methods are mainly limited by the low inference speed due to the requirements of hundreds or even thousands of sampling steps. Existing acceleration sampling techniques inevitably sacrifice performance to some extent, leading to over-blurry SR results. To address this issue, we propose a novel and efficient diffusion model for SR that significantly reduces the number of diffusion steps, thereby eliminating the need for post-acceleration during inference and its associated performance deterioration.

https://github.com/zsyOAOA/ResShift

ControlNet and T2I-Adapter Examples

Each ControlNet/T2I adapter needs the image that is passed to it to be in a specific format like depthmaps, canny maps and so on depending on the specific model if you want good results.

https://comfyanonymous.github.io/ComfyUI_examples/controlnet/

InvokeAI

InvokeAI is a leading creative engine for Stable Diffusion models, empowering professionals, artists, and enthusiasts to generate and create visual media using the latest AI-driven technologies. The solution offers an industry leading WebUI, supports terminal use through a CLI, and serves as the foundation for multiple commercial products.

https://github.com/invoke-ai/InvokeAI

Auto-Photoshop-StableDiffusion-Plugin

With Auto-Photoshop-StableDiffusion-Plugin, you can directly use the capabilities of Automatic1111 Stable Diffusion in Photoshop without switching between programs. This allows you to easily use Stable Diffusion AI in a familiar environment. You can edit your Stable Diffusion image with all your favorite tools and save it right in Photoshop.

DWPose: New pose detection method

https://github.com/IDEA-Research/DWPose

Installation https://github.com/IDEA-Research/DWPose/blob/main/INSTALL.md

How to Download FFmpeg

Written Tutorial https://www.nextdiffusion.ai

63 Photographers to use in SDXL prompts for AI art

AI generated images to provide some inspiration for prompts in Stable Diffusion XL 1.0; every image was created with the prompt “Dragon on beach by [Photographer Name]”. Photographer Names were rejected from inclusion if they didn’t provide results that were good quality (not too much weird anatomy), weren’t distinct in style from others included, or if they provided NSFW results.

stable-diffusion-webui-vectorstudio

Adds Javascript-SVG-Editor (SVG-Edit) as a tab to Stable-Diffusion-Webui Automatic 1111.

Adds an interactive vectorizer (monochrome and color: “SVGCode” as a further tab

Adds postprocessing using POTRACE — executable to mass convert you prompts from png to svg.

Intel® Embree

is a high-performance ray tracing library developed at Intel which supports x86 CPUs under Linux, macOS, and Windows; ARM CPUs on macOS; as well as Intel® Arc™ GPUs under Linux and Windows.

https://github.com/embree/embree

Temporally Coherent Stable Diffusion Videos via a Video Codec Approach

New research from China has used the well-established precepts of video frame encoding as a central approach to a new method for creating Stable Diffusion videos that are temporally consistent (i.e., that do not show jarring changes throughout the video).

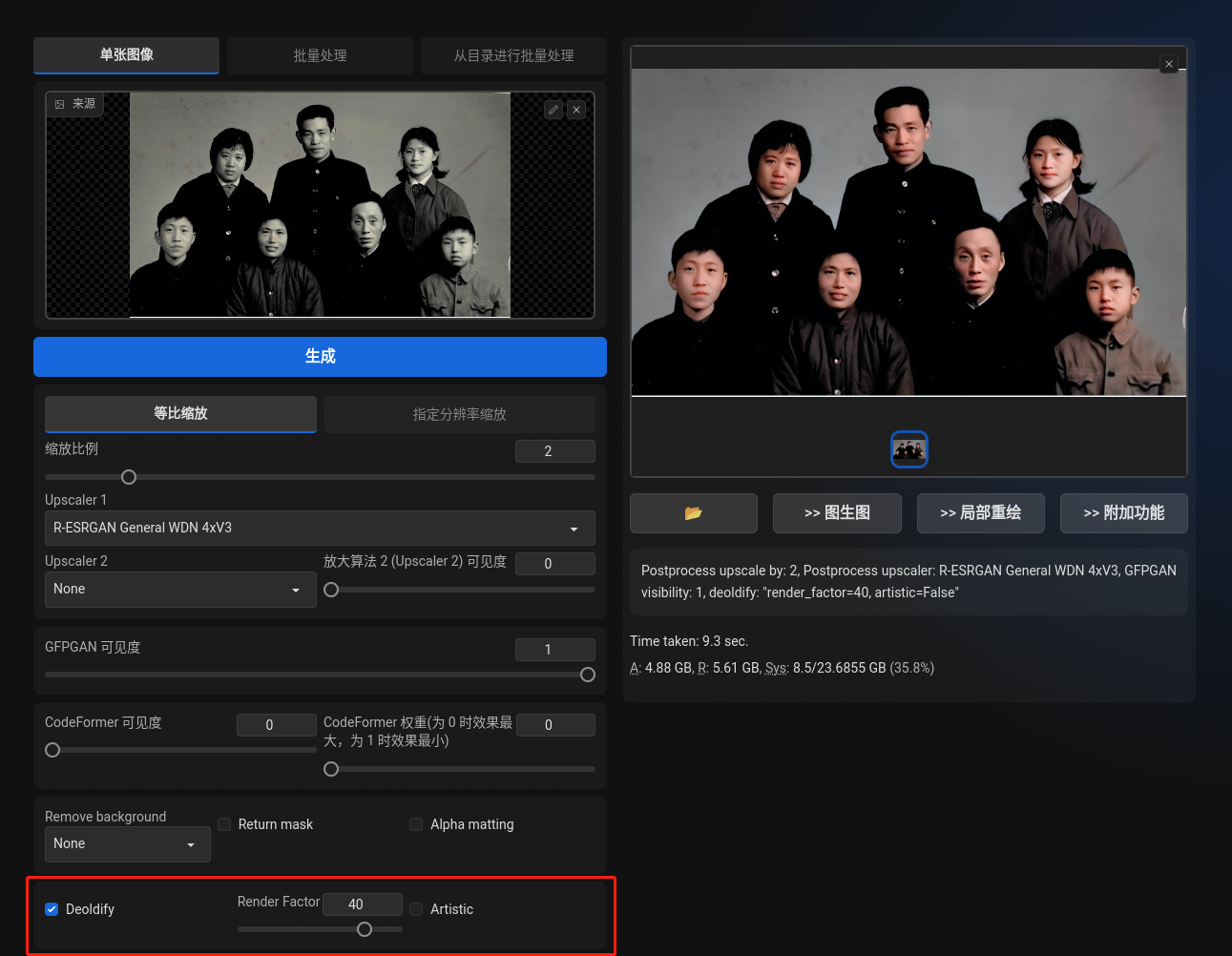

DeOldify for Stable Diffusion WebUI

This is an extension for StableDiffusion’s AUTOMATIC1111 web-ui that allows colorize of old photos. It is based on deoldify.

https://github.com/SpenserCai/sd-webui-deoldify

Papercut_SDXL

.webp)

https://huggingface.co/TheLastBen/Papercut_SDXL

Stable Diffusion Evaluation

This HuggingFace Space lets you compare Stable-Diffusion V1.5 vs SDXL image quality.

https://huggingface.co/spaces/qblocks/Monster-SD

Leave a reply